Asymmetric key cryptography is also employed to develop digital signature schemes, i.e., a digital counterpart of classic signature on paper. There are some important features of classic signature that deserve to be discussed in order to understand which are the basic expected properties of any good signature scheme.

- Classic signature is physically part of the signed document. In a digital world this requires a mechanism the avoid that a signature can be just cut-and-pasted to any different document. To achieve this, we let the signature depend on the signed document: two different documents signed by the same entity will have different signatures;

- Classic signature is verified by comparing it with an official reference signature. This is not possible in the digital world since we have just required (item above) that the signature of different documents should always be different. We thus need a mechanism to verify a signed document with respect to an entity (the signer);

- Documents signed on paper cannot be easily copied. In the digital world we can trivially cut-and-paste bytes so any signed document can be replicated as many times as we need. In certain applications (e.g. e-commerce) signature needs to be integrated with mechanisms to avoid uncontrolled replicas.

Definition

A digital signature scheme consists of two functions:

generates the signature of

using a private key

;

checks the validity of signature

on message

using a public key

. Returns true if the signature is valid and false otherwise.

Similarly to asymmetric cryptography, we require that these two functions are easy to compute knowing the keys. Moreover, it must hold .

For what concerns security, we require that without knowing the private key it is computationally infeasible to find a message and a signature

such that

holds, i.e., such that y is a valid signature for x.

RSA-based digital signature (a flawed first attempt)

We now discuss how the RSA cipher can be used to implement a digital signature scheme. We let

Notice that encryption and decryption of RSA are the same function. To sign we rise x to the private exponent, modulo n. To check the signature we rise the signature to the public exponent modulo n, and we check that the result is the same as the original message x.

Problems and attacks

This scheme is not yet satisfactory as it does not satisfy the above security property. We show different ways to find x and y such that .

Forging a “random” signed message: We pick an arbitrary signature y and we compute the corresponding signed message as since

, by definition. Of course the signed message will be meaningless but still it is a valid forged signature and depending on the application it could be accepted as valid (e.g., when the expected message only contains a number).

Multiplying signed messages: If we have two signed messages x1, x2 with signatures y1 and y2, then y1 * y2 mod n is the signature of a message x1 * x2 mod n. Again, if the expected message is just a number with no particular format or padding, this attack might be very effective.

In addition to the discussed forging attacks, the above defined signature has different drawbacks:

- The size of the signature at least the same as the size of the message meaning that we have to send at least double the size of signed data;

- If a message is bigger than the RSA modulus we would need to split the message into blocks and use some encryption mode to encrypt the different blocks. Recall, however, that none of the encryption modes we have discussed for symmetric ciphers provide a satisfactory level of integrity. This would imply possible attacks in which both the message and the signature are manipulated;

- Asymmetric cryptography is much slower than symmetric one. Even adopting variants of encryption modes with strong integrity properties we would need to perform many encryptions to sign a message, and this could become unaffordable for long files.

The solution to all of these issues, including the problem of forging, is the adoption of cryptographic hash functions. A hash function is a function taking an arbitrarily long message x and giving a digest z of fixed length. When used in cryptography, these functions are required to satisfy specific properties that we discuss below through our running-example.

RSA-based digital signature (hash-based)

We modify our proposed signature scheme based on RSA so to prevent all of the discussed problems. Let be a hash function.

Thus, to sign x we decrypt its hash under the private key. To check the signature we encrypt the signature under the public key, we recompute the hash on x and we compare the two results.

Since the hash is of fixed length, it is enough to take it smaller than the RSA modulus to solve all the issues related to the size of the signature and the necessity of encryption modes: a signature would always be as long as the RSA modulus and will always need just one RSA encryption (plus the hash computation).

Forgery is more delicate. Consider the simplest attack (in the scheme with no hash) where, given an arbitrary signature y we compute the corresponding signed message as since

, by definition. In the hash-based scheme,

would provide the hash of the signed message, so if we are able to find x such that

we will have that

. This leads to our first property for cryptographic hash functions.

Preimage resistance

A hash function h is preimage resistant (or one-way) if given z it is infeasible to compute x such that h(x) = z.Example: We consider a simple hash function h(x) that splits message x into blocks of a fixed size k and computes

, i.e., the bit-wise xor of all blocks. This hash function is clearly not preimage resistant. given a digest z we can easily find preimages. For example,

where 0 notes the block of k zeros and || notes the concatenation of blocks, are all correct preimages of z.

Notice that preimage resistance also prevents forging based on RSA multiplicative property. If we have two signed messages x1, x2 with signatures y1 and y2, we have that y1 * y2 mod n is the signature of a message whose hash is the same as z = h(x1) * h(x2) mod n. Finding such a message x means that we can compute a preimage x of h such that h(x) = z, but this us ruled out by preimage resistance.

Thus, it appears that adopting a hash-based RSA signature scheme in which the hash function is preimage resistant solves all the issues. Unfortunately, we also have to consider potential problems deriving from the adoption of hashes: since a hash summarises messages as fixed-length digests, it can of course occur that different messages have the same hash. For example we might have different x1 and x2 such that h(x1) = h(x2). Then, , i.e., the two messages have the same signature.

Consider the following attack scenario: given a message x1 and its signature y1, the attacker computes a x2 such that h(x1) = h(x2). Then, y1 is a valid signature for x2 meaning that the attacker has forged a signature for e message of his choice. To prevent this problem we require the following property.

Second-preimage resistance

A hash function is second-preimage resistant if given x1 it is infeasible to compute x2 such that h(x1) = h(x2).

If we assume the attacker is allowed to ask for signatures (similarly to what happens in a chosen-plaintext attack) it might still happen that he chooses two different messages x1 and x2 with the same hash and asks for a signature on x1. In this way he obtains a valid signature for x2. To exemplify, suppose he manage to generate two messages that look the same but one (x2) gives more advantage than the other (x1), such as in two contracts with different prices. The attacker convinces the other party to sign x1 which looks reasonable but obtains a signature on x2 that has an outrageous price in it. For this reason, the ultimate ideal property for hash function is as follows:

Collision resistance

A hash function is collision resistant if it is infeasible to compute different x1 and x2 such that h(x1) = h(x2).

Differently from Second-preimage resistance, here the attacker can choose both x1 and x2 and he is not given a x1 that he has to find a second-preimage of. Thus this latter property implies the previous one, i.e., if a hash is collision resistant it is also second-preimage resistant.

It is possible to prove that collision resistance also implies preimage resistance. Thus, if a hash function is collision resistant it has all of the above mentioned properties. This result holds under the assumption that the number of messages we can hash is at least twice as the number of digests.

Theorem. Let be a collision resistant hash function such that

. Then

is also preimage resistant.

Proof. We prove the following equivalent fact: if is not preimage resistant then

is not collision resistant. To do so, we show that given an algorithm Invert(z) for inverting

(that breaks preimage resistance) we can write a Las Vegas probabilistic algorithm that finds a collision. The algorithm is, in fact, rather simple: We pick a random message, we compute its hash and we invert it using the given algorithm Invert(z). If we find a different message we are done otherwise, if we are unlucky and get the initial message, we FAIL.

FindCollisions:

choose a random x1 in X

z = h(x1)

x2 = Invert(z)

if x1 != x2:

output(x1,x2)

else:

FAIL

Correctness of solution is obvious since x1 and x2 have the same hash meaning that they are a collision. We now prove that failure happens with probability at most 1/2. Since we have possible digests, we have that Invert(z) returns exactly

preimages, one for each digest, and these are the ‘unlucky’ cases, i.e., the messages that once hashed will be mapped back to themselves. Thus the good cases are exactly

and the probability of success is

. As a consequence we fail with probability

. As any Las Vegas algorithm, this can be iterated as needed. For r iterations we have that the probability of failure is

.

Example: Hash h(x) of previous example is clearly not collision and second preimage resistant. Given we have, for example, that

has the same digest. We can swap blocks (xor is commutative). We can add zero blocks. We can add whatever block twice or add it once and then add it xored with the original message, and so on. We consider another simple hash function

, i.e., we use the previous hash to obtain a key for a cipher and we encrypt the constant 0 under that key. This hash function is preimage resistant if the adopted cipher is resistant to known plaintext attacks. In fact, finding h(x) from g(x) corresponds to breaking the cipher knowing the plaintext 0 and the ciphertext g(x). However, it is neither collision resistant nor second preimage resistant, as collisions on h are collisions on g and we have shown above that h is not collision resistant.

Birthday attack

We have discussed important properties of hash functions that are needed, for example, when developing a hash based signature scheme. Collision resistance is the strongest of such properties: it is important that hash functions are carefully designed so to make the computation of collisions infeasible. As for cryptography, however, the attacker can try to break a function by brute force. For the case of hash function, brute forcing is much simpler than expected thanks to the so called Birthday Attack.

This funny name comes from an analogy to the famous birthday paradox: given a group of only 23 people with probability 1/2 there are at least two with the same birthday (day and month, not year). With a group of 41 the probability is already around 90%. This true fact is so counter-intuitive to be called paradox.

The analogy with cryptographic hash functions is immediate: birthday can be seen as a hash function from any person to a fixed-size set of 365 days of the year. Two people having the same birthday represent a collision on the hash function. The fact collisions are so likely means that brute-forcing a hash function might be much easier than expected, and we will give a precise estimate of this.

Let us now show where the ‘paradox’ comes from. We assume people being mapped to birthdays in a uniform way. This assumption fits very well with cryptographic hashes as they usually behaves that way to make finding a collision or a preimage as much hard as possible. We now compute the probability that in a group of k people none share the birthday. For the first person any birthday is fine. Thus we have probability 1 of success. The second person should not share the birthday with the first one, meaning that we have probability 364/365 of success, and so on. We require that these events all occur together giving a probability of . Consequently, the probability of a collision is

. The following code computes this probability:

from decimal import *

def Birthday(k):

r = 1

for i in range(0,k):

r=r*(365-i)

return 1 - (Decimal(r)/Decimal(365**k))

For example:

>>> print Birthday(23)

0.5072972343239854072254172283

>>> print Birthday(41)

0.9031516114817354017392885072

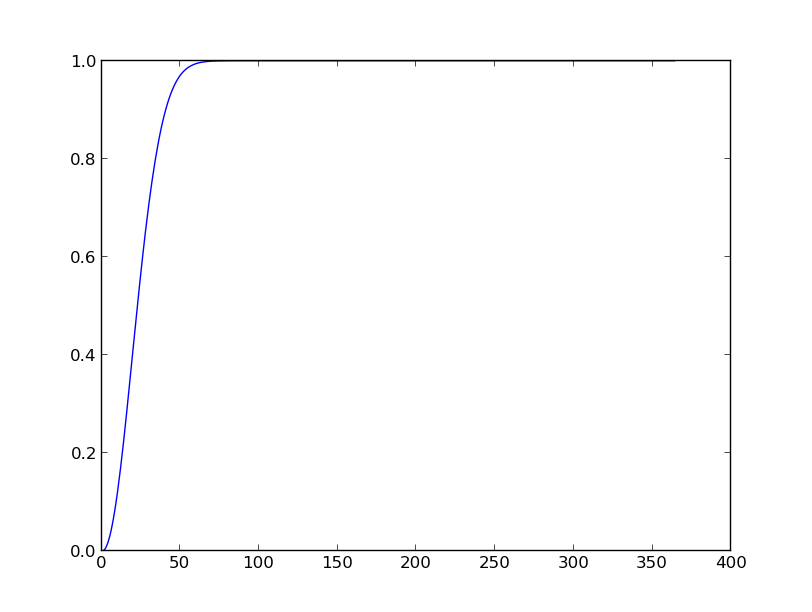

Plotting over all values from 1 to 365 shows how fast the probability grows:

It is now useful to find a relation between the number giving probability 1/2 (in this case 23) and the number of digests, as this will allow us to estimate the cost of a brute force attack. We let n be the number of digests (365 for the birthday paradox). We reason as follows: the probability that we do NOT find a collision is . Now from analysis we know that, for small x,

(from Taylor series

). Thus the probability of finding a collision is

. Thus

. We thus get

. Thus

. By a further approximation (disregarding k) we get

.

For this gives

. Thus, a brute-force attack on a hash functions with n digests finds a collision with probability 1/2 after about

attempts. For example, if a hash function returns digests of 128 bits it takes about

trials to break it. So to have the same security we have with a 128 bit cipher we need double the length, i.e., 256 bits of hash.

Commonly used hash functions

One of the most famous cryptographic hash function is MD5 (Message Digest 5), by Ron Rivest (the ‘R’ of RSA). It is a 128-bit hash used in many applications. Recently it has been shown to be not collision resistant. Moreover the relatively small size allows for a birthday attack in ‘only’ steps.

The most common alternative to MD5 is SHA (Secure Hash Algorithm). The second generation SHA (SHA-2) has a variable digest size from 224 to 512 bits. It is not vulnerable to the collision attacks of RSA and the length makes birthday attack infeasible.

The National Institute of Technology (NIST) has just concluded the selection of SHA-3, with a process similar to the one for selection AES. The winner is Keccak.

Message Authentication Codes

We conclude by illustrating cryptographic mechanisms to achieve authentication using symmetric keys. Message Authentication Codes (MACs) are hash functions with a symmetric key. They produce a fixed-size digest of a message, whose value depends on the given key. The property we require is similar to what we asked for signatures: without knowing the key it should be computationally infeasible to find a message

and a MAC

such that

, i.e., such that y is the MAC for x under key k.

The MAC is checked by recomputing it and comparing with the received one. For example consider:

After receiving the message, Bob recomputes

CBC-based MAC. A simple example of how to implement a MAC is by using CBC encryption mode (with a zero IV):

We than define

Suppose to have a 1-block message and the relative

. We have that

. Thus if the attacker asks (chosen-text attack) for the MAC of

he obtains a MAC for the two-blocks message

.

The following less-trivial forgery allows for more control on the attacked message. Consider two 1-block messages with their MACs and

. Let

and

. Then

Thus, if the attacker wants to extend message with an arbitrary second block

, he can ask for the MAC of

. The obtained MAC will be valid for message

.

To prevent these forgeries, the ISO/IEC 9797-1 standard adds additional transformations to the final encrypted CBC block. For example, given an additional key we define

. Changing the last step is a way to avoid that MACs can be forged from MACs of shorter messages, as done above. As an exercise, you can check that the above attacks do not work under this variation.

Hash-based MACs Another way to implement MACs is to base them on cryptographic hash functions. The most famous is called HMAC, by Mihir Bellare, Ran Canetti, and Hugo Krawczyk (see also RFC 2104).

The idea is to iterate a given hash function h over blocks of B bytes. We typically take B as 64 bytes, i.e., 512 bits. We let

= the byte 0x36 repeated B times

= the byte 0x5C repeated B times

where is obtained from

by appending 0 up to the byte length B. Interestingly, the authors show that if an attacker is able to forge HMAC that he is also able to find collisions on the underlying hash function (even when fed with a random secret as done here). Thus, if the hash function is collision resistant than HMAC is unforgeable.

MACs vs. signatures. What is the difference between MACs and signatures given that they seem to provide very similar guarantees? To understand this crucial issue think of Alice sending to Bob a contract . To prove authenticity (the message comes from Alice) she can decide to either sign it as

with her private key

or compute a MAC as

under a key

shared with Bob. After receiving the message, Bob checks the signature or recomputes the MAC to verify its authenticity. What now if Alice denies to have ever sent

to Bob? In other words, has Bob a way to show to to a third party (a judge) that the contract is from Alice? Here it becomes crucial the symmetry of the key: Alice is the only one knowing her private key

while both Alice and Bob know the shared key

. It is clear that, while signature can only be generated by Alice, a MAC can be easily ‘forged’ by Bob. This important difference can be summarized by stating that MACs never provide non-repudiability and, more specifically, they do not allow to prove authenticity to a third party.